01 Jan 2022

Goal: get a new NAS with more capacity (CPU, RAM and storage).

Steps:

- Spend money on a new NAS (Synology DS920+)

- Spend money on 3.5” drives (4x 4 TB WD Red)

- Spend money on a DDR4 RAM stick (16 GB)

- Spend money on an NVMe drive for essentials (512 GB Samsung 970 PRO)

Synology officially supports only 8 GB of RAM on this system, but there were stories of larger modules working, so I gave it a try. With the 4 GB soldered on board plus the 16 GB stick, I now have a NAS with 20 GB of RAM.

Synology also does not allow NVMe SSDs to be used as storage, only as a cache. A cache was not needed for my workload, so I took this as a challenge.

Steps to reproduce:

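Log in via SSH, escalate to root (e.g. with `sudo -i`) and run:

```shell
# Partition the NVMe drive with Synology's layout; the data partition ends up as nvme0n1p3
synopartition --part /dev/nvme0n1 12
# Wrap the data partition in a single-member RAID 1 array so DSM can assemble it as a pool
mdadm --create /dev/md3 --level=1 --raid-devices=1 --force /dev/nvme0n1p3
```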

After that, nothing visible happens until you go to Storage Manager, open the three-dot menu and select “Online Assemble”. Voilà: a new SSD volume for fun with Docker and virtual machines.
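If the volume does not show up in Storage Manager, it helps to confirm at the command line that the md3 array itself is running. The sketch below is a minimal check, assuming the array name md3 from the mdadm command; `md_is_active` is a hypothetical helper, not something DSM ships.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper, not a Synology tool): succeed if the
# given md array is listed as active in an mdstat-style file.
# Defaults to the live /proc/mdstat; the optional second argument lets you
# point it at a saved copy instead.
md_is_active() {
    array="$1"                      # array name, e.g. md3
    mdstat="${2:-/proc/mdstat}"     # file to inspect
    # Lines in /proc/mdstat look like: "md3 : active raid1 nvme0n1p3[0]"
    grep -q "^${array} : active" "$mdstat"
}
```

For example, `md_is_active md3 && echo "md3 is up" || echo "md3 is not active"`; for more detail, `mdadm --detail /dev/md3` shows the array state and its member partition.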

Be aware that Synology does not support this due to the lack of cooling, so keep an eye on temps!

This was done on DSM 7.0.1-42218.

AB

28 Mar 2019

Post created at the Git workshop in Sintra

AB

14 Apr 2018

My life has taken another twist.

Life at Siemens was good and peaceful, and that was the problem.

I was becoming frustrated with tons of meetings every day without any practical outcome, and that was driving me mad.

It was really annoying to hear “Let’s think it over for a week and then decide” in every call…

Not only that, but the projects on the table were along the lines of “How do I use the cloud?”

As a Siemens colleague once said, “I believe you will become dumb in here”, and it was true… I felt I was becoming dumb.

The company where I now work was looking for someone with my profile, so they reached out to me for a little talk, and I accepted.

The conversation went well and the job seemed cool, so I accepted the challenge.

Two weeks in, time at work flies like never before, and the environment is even better than at Siemens (I didn’t really think that was possible)!

AB

12 Jan 2018

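To check whether your Linux kernel already has the Meltdown patch (kernel page-table isolation, KPTI) enabled, just run this simple command in the terminal:

```shell
# Search the kernel log for the message printed when page-table isolation is active
# (if dmesg is restricted on your system, prefix it with sudo)
dmesg | grep -q "Kernel/User page tables isolation: enabled" && echo "Cool! Patched! You are on the right track! :)" || echo "Shit, you are still vulnerable :("
```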
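Boot messages can rotate out of the dmesg buffer on long-running machines. Newer kernels (4.15 and later, and many distribution kernels via backports) also report the Meltdown status in sysfs, under /sys/devices/system/cpu/vulnerabilities/meltdown. A minimal sketch, assuming that file; `meltdown_status` is a hypothetical helper name, and it falls back to the same dmesg message when the file is missing:

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): print the Meltdown status of this machine.
# Prefers the kernel's sysfs report; the optional argument overrides its path.
meltdown_status() {
    f="${1:-/sys/devices/system/cpu/vulnerabilities/meltdown}"
    if [ -r "$f" ]; then
        # Typical contents: "Mitigation: PTI", "Vulnerable" or "Not affected"
        case "$(cat "$f")" in
            "Not affected"*) echo "not-affected" ;;
            Mitigation*)     echo "patched" ;;
            *)               echo "vulnerable" ;;
        esac
    elif dmesg 2>/dev/null | grep -q "Kernel/User page tables isolation: enabled"; then
        # Older kernels without the sysfs file: fall back to the boot message
        echo "patched"
    else
        # No sysfs file and no boot message (it may have rotated out, or dmesg is restricted)
        echo "unknown"
    fi
}
```

Run `meltdown_status` with no arguments to check the current machine; it prints `patched`, `vulnerable`, `not-affected` or, when neither source is conclusive, `unknown`.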

AB

10 Jan 2018

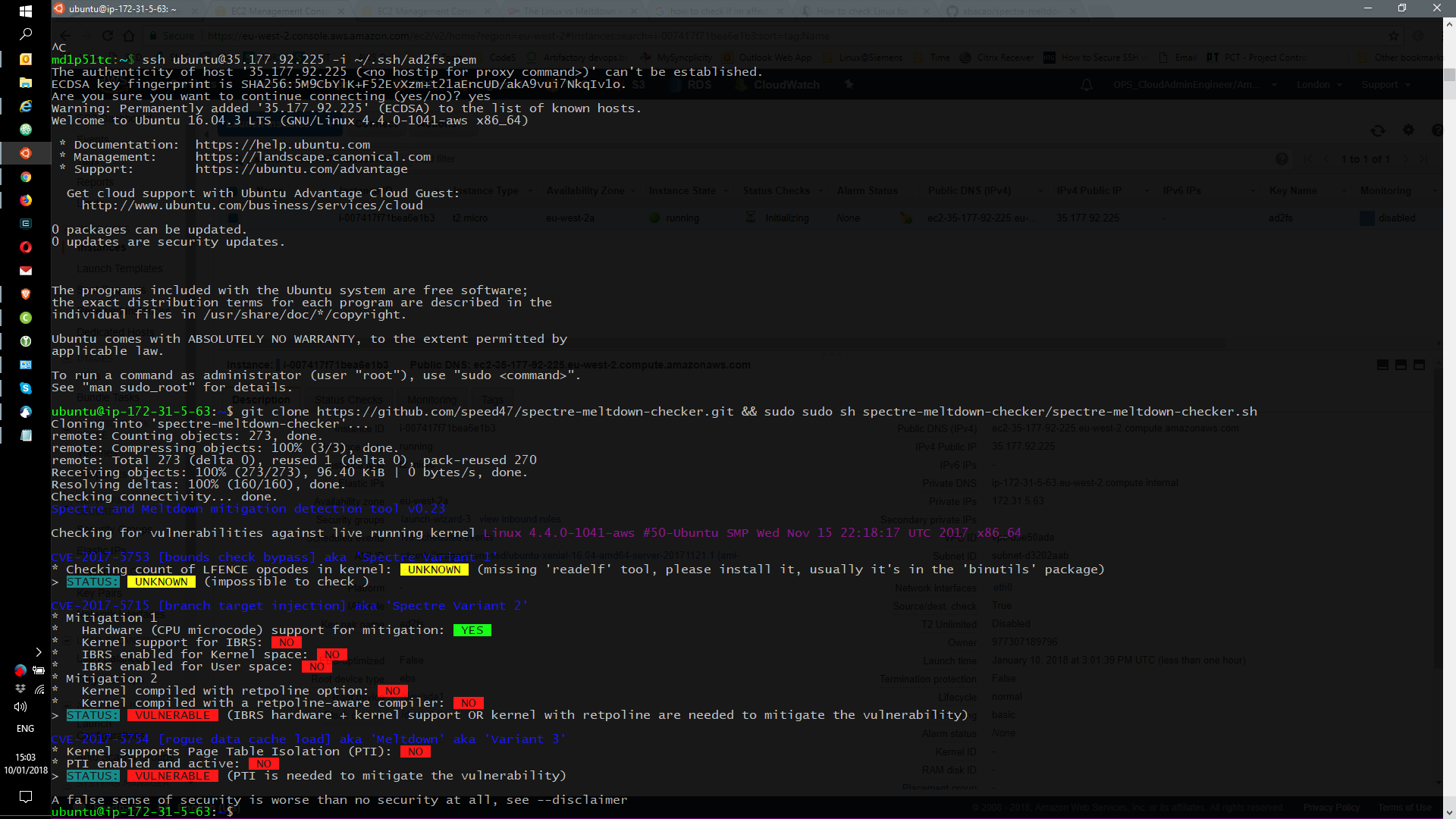

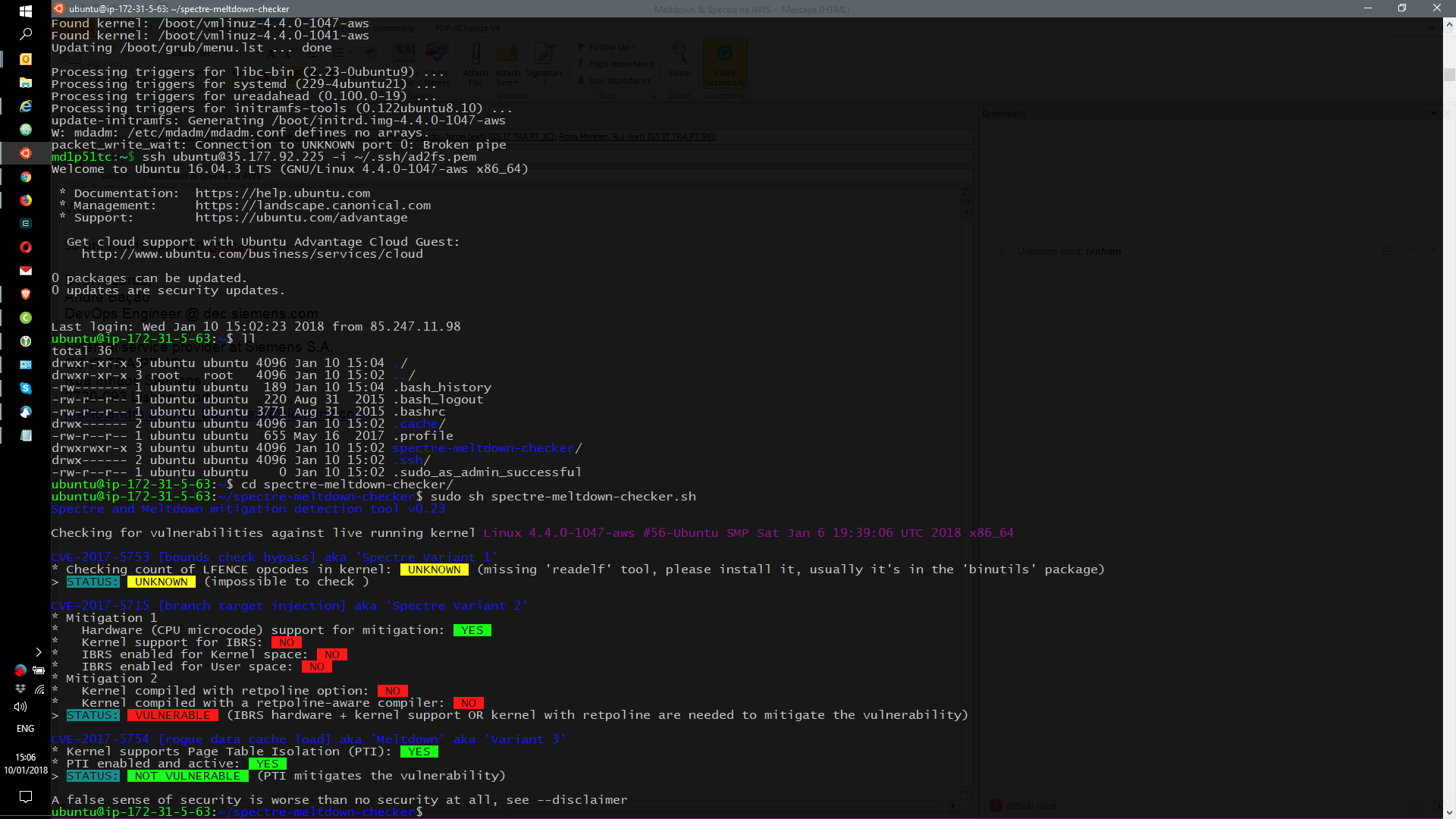

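As you might have heard, there are two major new vulnerabilities, called Meltdown and Spectre.

Both are tied to how CPUs are designed, specifically to speculative execution: to save time, the processor runs upcoming instructions ahead of time and loads their data into the cache before it knows whether they are needed. The traces this work leaves in the cache can be measured to leak data that should be off-limits.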

But I’m here just to show some real-world effects in the AWS cloud.

Everyone who runs instances on AWS should update them.

At the moment, new Red Hat instances come already patched, but new Ubuntu instances do not, so they need to be patched as soon as possible after creation.

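It’s not yet the final solution, but it covers part of the problem (the kernel page-table isolation patch targets Meltdown; full Spectre mitigation needs more work).

Please check the screenshots below.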

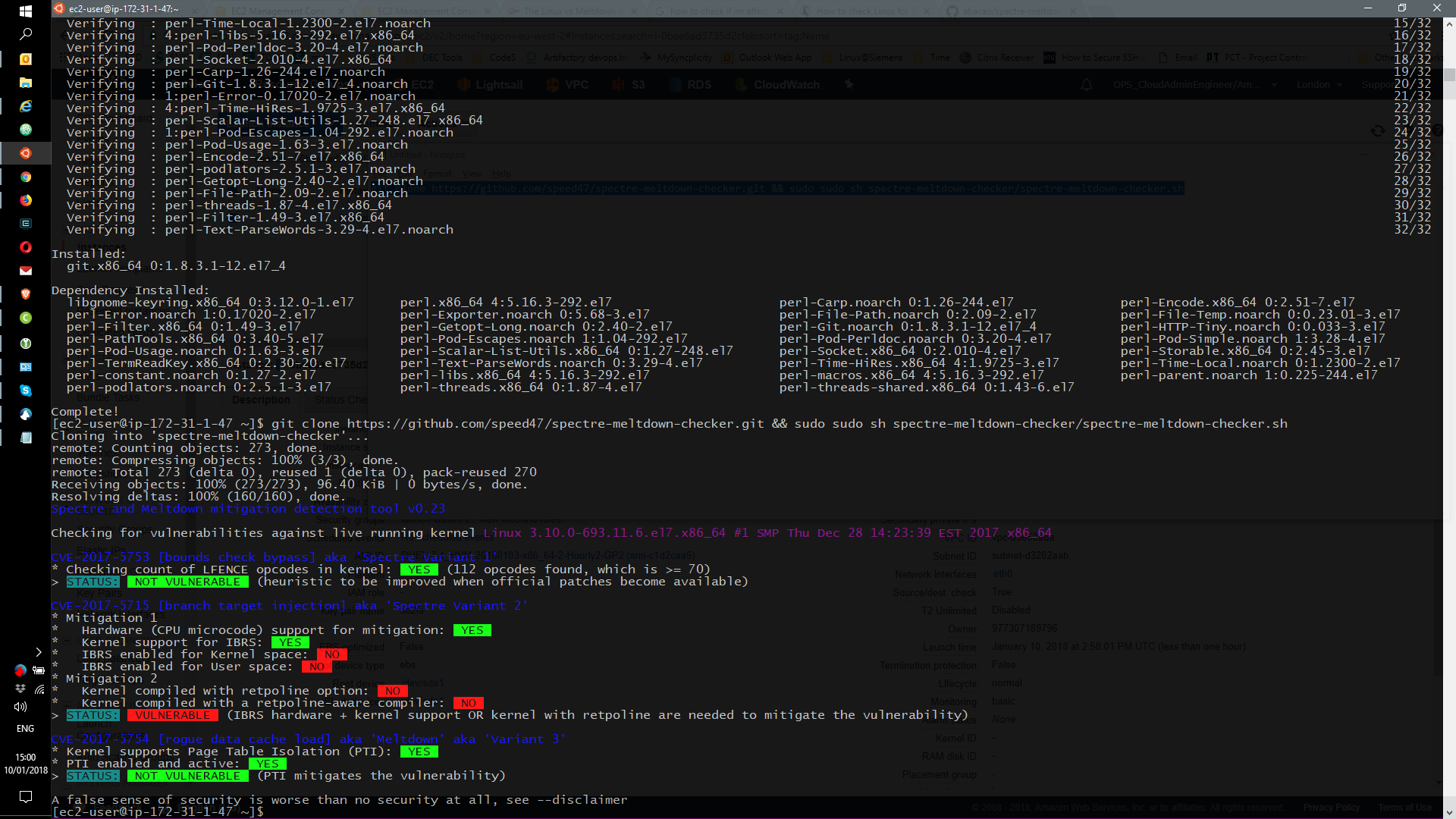

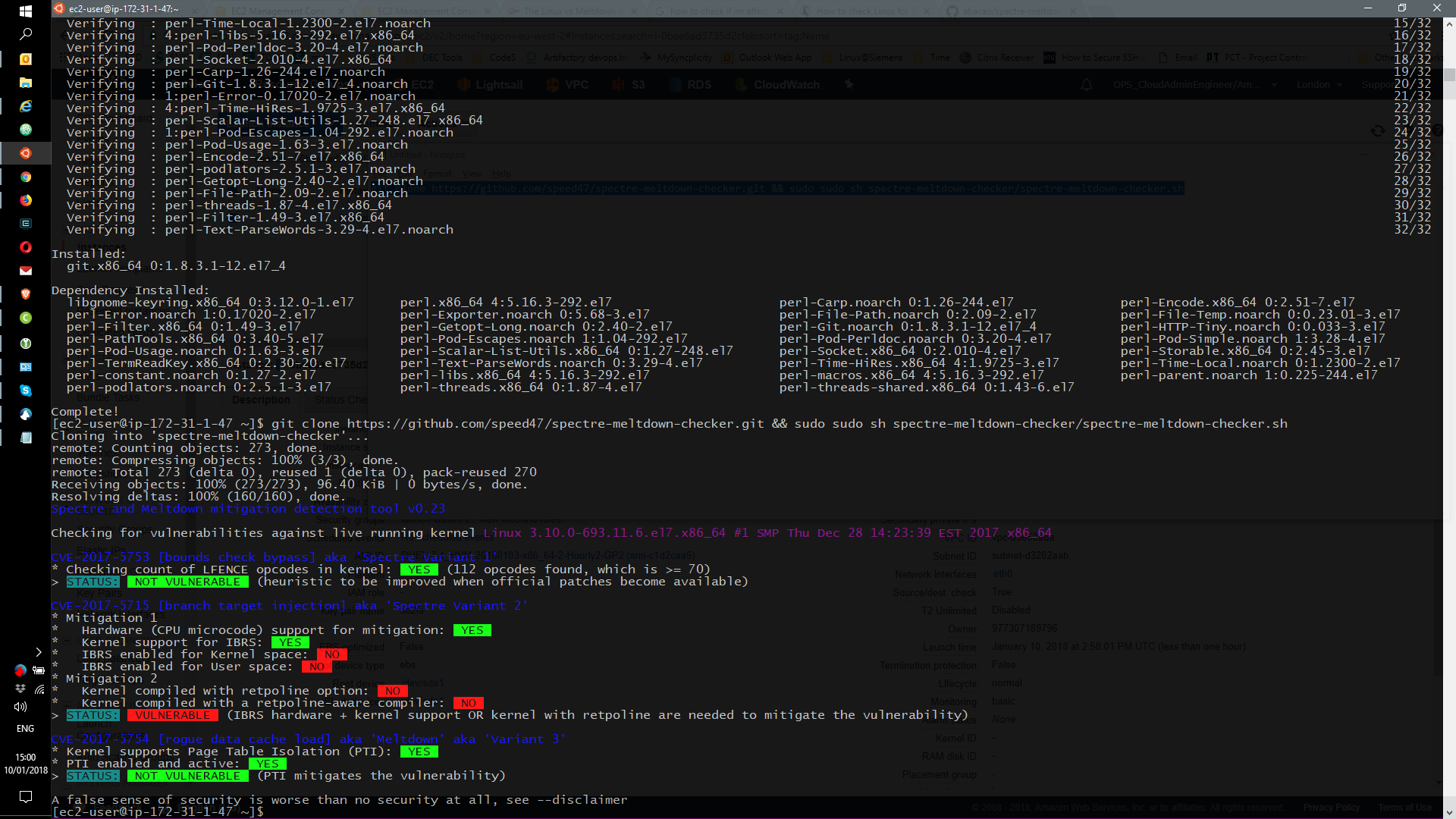

Red Hat out of the box (only git was installed)

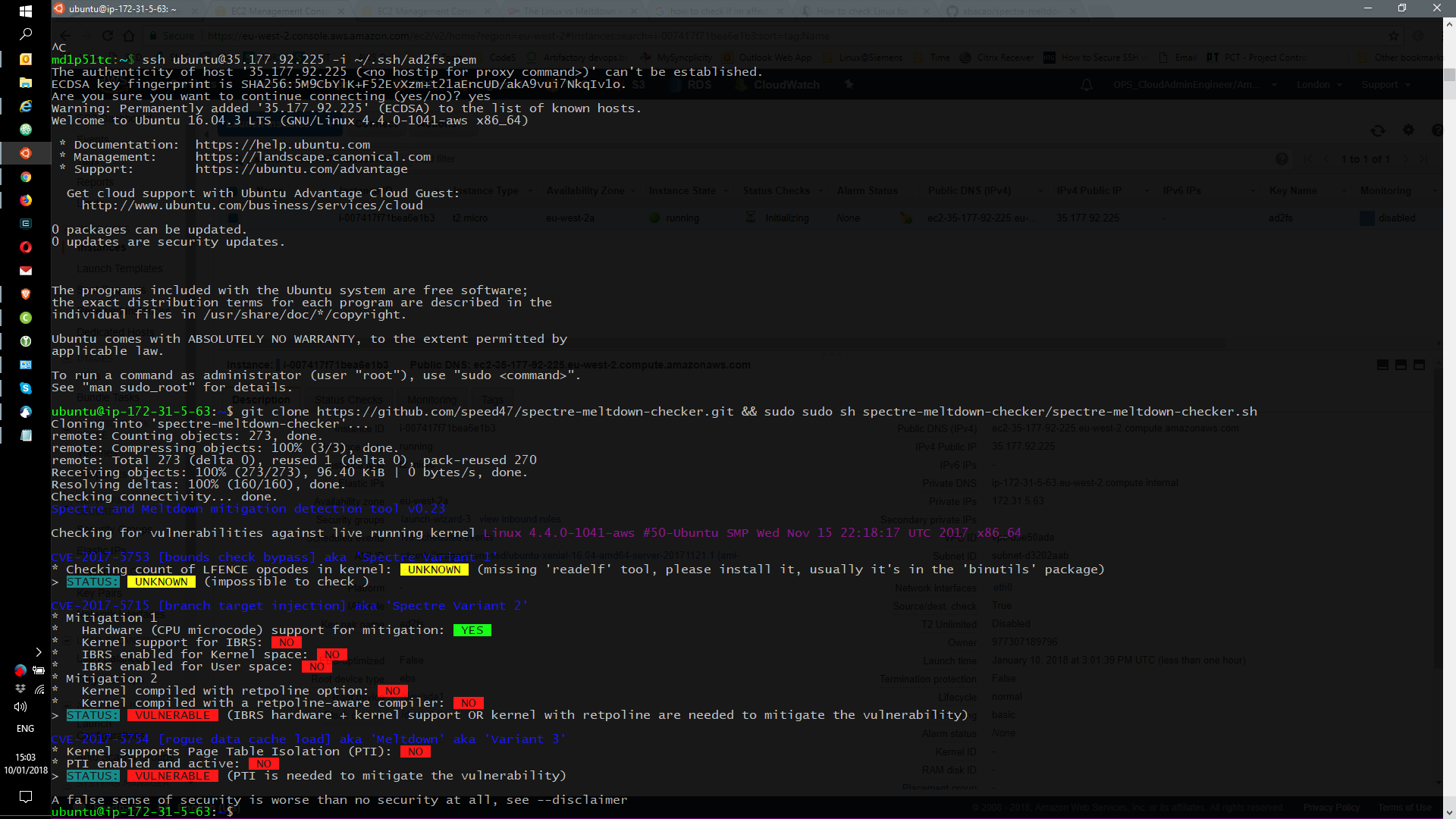

Ubuntu 16.04 straight from AWS

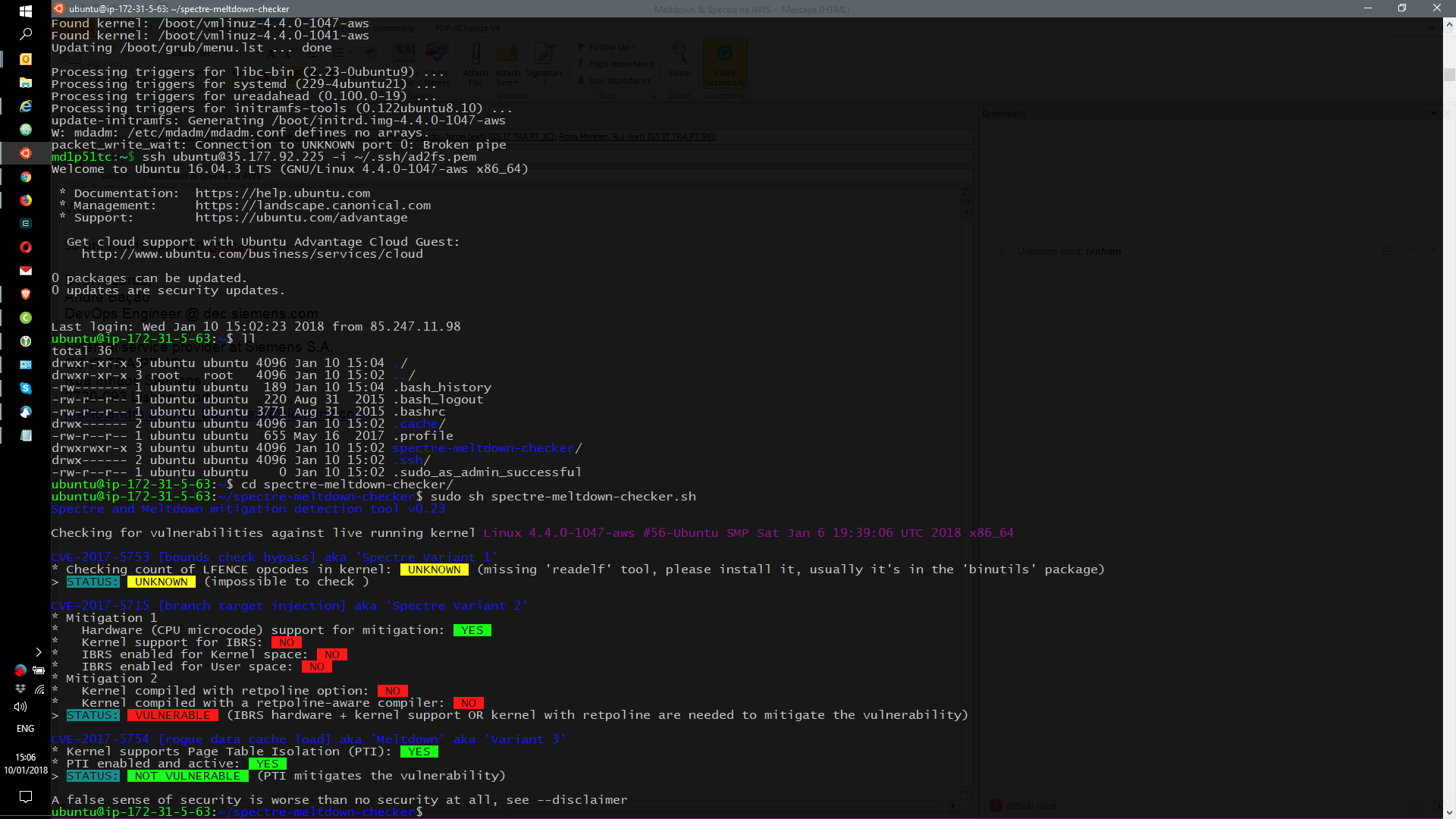

Ubuntu 16.04 updated
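Patching a fresh Ubuntu 16.04 instance, as in the last screenshot, comes down to installing the updated kernel and rebooting into it. A minimal sketch with hypothetical helper names, assuming a standard Ubuntu image with sudo access:

```shell
#!/bin/sh
# Minimal sketch for a fresh Ubuntu 16.04 EC2 instance (helper names are
# hypothetical). dist-upgrade is used instead of upgrade because a new
# kernel usually arrives as a new package, which plain upgrade holds back.
patch_instance() {
    sudo apt-get update && sudo apt-get -y dist-upgrade
}

# Ubuntu creates this flag file when an installed update (such as a new
# kernel) only takes effect after a reboot. The argument is for testing.
reboot_required() {
    [ -e "${1:-/var/run/reboot-required}" ]
}
```

For example: `patch_instance && reboot_required && sudo reboot`. Once the instance is back up, `uname -r` shows the kernel it booted.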

There is plenty of further reading if you want to dig deeper.

Some that I found interesting:

https://en.wikipedia.org/wiki/Meltdown_(security_vulnerability)

https://en.wikipedia.org/wiki/Spectre_(security_vulnerability)

Ubuntu: https://wiki.ubuntu.com/SecurityTeam/KnowledgeBase/SpectreAndMeltdown

Red Hat: https://access.redhat.com/security/cve/CVE-2017-5754

AWS: https://alas.aws.amazon.com/ALAS-2018-939.html

Nextcloud: https://nextcloud.com/blog/security-flaw-in-intel-cpus-breaks-isolation-between-cloud-containers/

Git repo used to show the status: https://github.com/abacao/spectre-meltdown-checker

AB